A/B testing & Usability testing: Better together

How to join two complementary methods for product development

A/B testing is a data-driven way to evaluate design variations, but what happens before testing? How do we identify design variants worth exploring?

This article examines how small-sample usability tests serve as a perfect complement to A/B tests. By the end, you’ll understand how small-sample usability tests can create better variations for A/B tests and how to incorporate them into a programmatic research practice.

What you need to know about A/B Testing

A/B testing, sometimes called split testing, is a quantitative method for evaluating design variations against each other on a live website or app. While powerful, A/B tests are not particularly common practice among UX researchers (UXRs) [1] [2]. If you’re unfamiliar with A/B tests, here’s a quick primer:

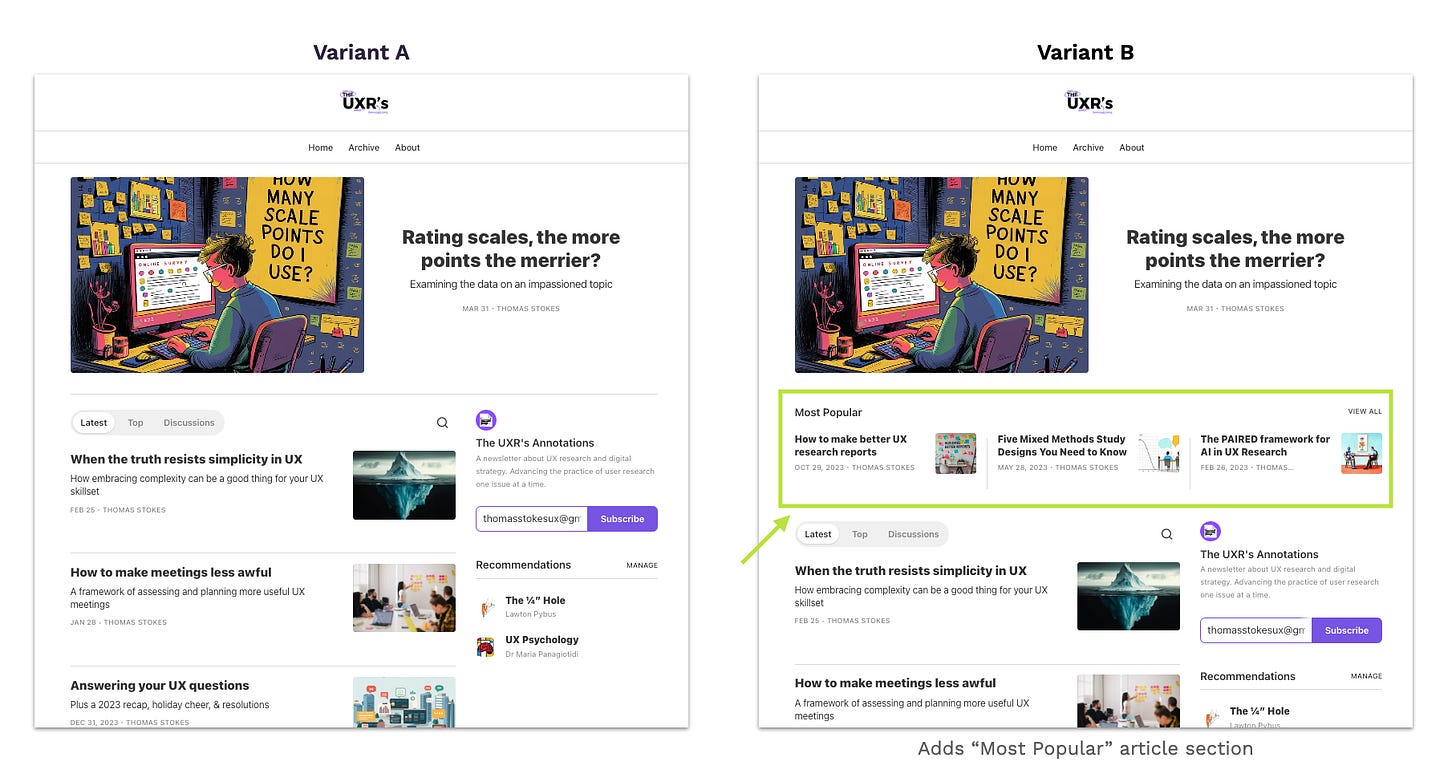

Variant A commonly refers to the current design, while Variant B is modified with a change hypothesized to improve the user experience and/or KPIs.

Users are randomly assigned to Variant A or B using an identifier (e.g., cookies, IP addresses, or user IDs) so that roughly half of the website traffic sees each.

The test duration (days, weeks, etc.) is determined using historical website traffic volume and statistical sample size calculations.

On-site analytics measure user behaviors for the duration of the test.

After the test, the researcher performs significance testing to determine if the modifications in Variant B produced a positive result.
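The random-assignment step in the primer above can be sketched in a few lines. This is a minimal illustration, not a description of any particular testing tool: it assumes users are identified by a string ID and bucketed by hashing that ID, and the function name `assign_variant` and the experiment label are hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str = "homepage-popular-articles") -> str:
    """Deterministically map a user to 'A' or 'B' with roughly equal odds."""
    # Salting with the (hypothetical) experiment name keeps a user's
    # assignment in one test independent of their assignment in another.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Read the hash as an integer: even values go to A, odd values to B.
    return "A" if int(digest, 16) % 2 == 0 else "B"

# Simulate 10,000 made-up user IDs to check the split is close to 50/50.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1
print(counts)
```

Hashing rather than drawing a fresh random number means a returning user always sees the same variant, which keeps their experience consistent for the duration of the test.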

As a fictitious example, imagine I wanted to increase the proportion of my homepage visitors who click to read an article. I might hypothesize that featuring my “best” articles is a good idea and implement a new section on the homepage displaying my most popular articles. I could compare this new design (Variant B) against the original (Variant A) through a test that randomly assigns users and measures the proportion who click on an article, i.e., the click-through rate (CTR). After some time, I can analyze the data to determine whether featuring popular articles on the homepage increases CTR for new visitors.

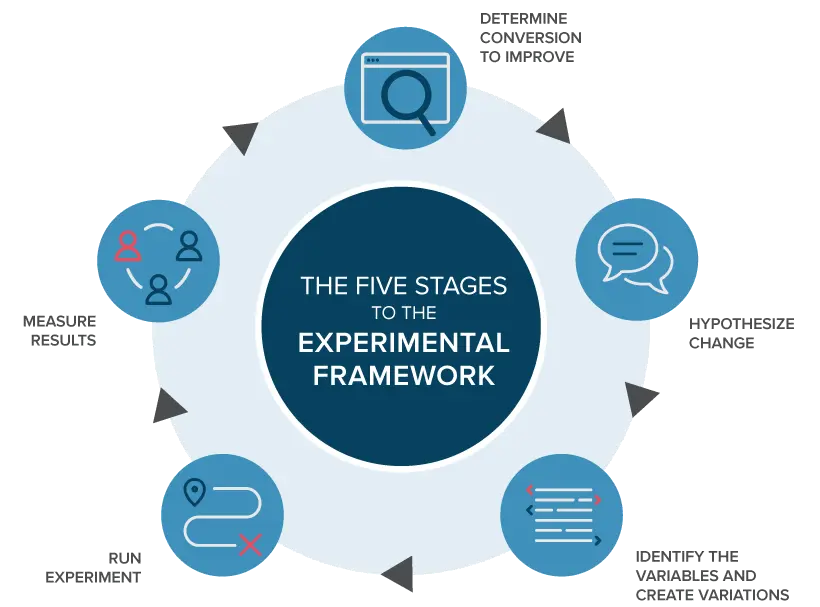
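For the homepage example, the significance test at the end might look like the sketch below. It uses a two-proportion z-test, which is one common choice for comparing click-through rates rather than the only valid one, and the visitor and click counts are invented purely for illustration.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(clicks_a, visitors_a, clicks_b, visitors_b):
    """Return (z, two-sided p-value) for the difference in CTR between B and A."""
    p_a = clicks_a / visitors_a
    p_b = clicks_b / visitors_b
    # Pooled CTR under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: 8.0% CTR for Variant A, 9.2% CTR for Variant B.
z, p_value = two_proportion_z_test(
    clicks_a=400, visitors_a=5000, clicks_b=460, visitors_b=5000
)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

With these made-up numbers the p-value falls below the conventional 0.05 threshold, so the test would suggest the popular-articles section improved CTR. It would not say why, which is the gap the rest of this article addresses.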

As a method, A/B testing’s strength is its ability to support data-driven decisions; you remove the guesswork and objectively determine which design variation performed better on a specific KPI or set of KPIs. With a focus on analytics and quantitative data, A/B testing also allows researchers to quantify the impact of design variations, which can help to support the case for changes. Finally, A/B testing can fit into an iterative, ongoing process with continual testing of new variations that gradually refine a design based on user data.

But, like any method, A/B tests aren’t without their limitations. First, A/B tests generally require a large sample size for reliable results. Without a sufficient sample size, tests can lead to inconclusive data. For some sites with low traffic volume, A/B tests may take several months. Second, A/B testing requires resources (e.g., development support, someone with statistics skills, and data analytics tools) that some groups may not have. Third, and most importantly, A/B tests have very little explanatory power; in other words, A/B tests don’t offer much information to help you understand the reason behind the results. Further, the outcome of one A/B test is a poor guide for what variations should be tested in subsequent A/B tests.
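To make the sample-size limitation concrete, here is a rough sketch of how a required sample size and test duration could be estimated. It uses the standard normal-approximation formula for comparing two proportions; the baseline CTR, the hoped-for CTR, the traffic figures, and the function names are all assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(baseline, expected, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect baseline -> expected."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return ceil(numerator / (expected - baseline) ** 2)

def days_needed(n_per_variant, daily_visitors):
    """Days to fill both variants when traffic is split evenly between them."""
    return ceil(2 * n_per_variant / daily_visitors)

# Hypothetical goal: detect a lift in CTR from 8.0% to 9.2%.
n = sample_size_per_variant(baseline=0.08, expected=0.092)
print(n, days_needed(n, daily_visitors=200), days_needed(n, daily_visitors=5000))
```

Under these assumptions the test needs roughly 8,600 visitors per variant. A site with 5,000 daily visitors could finish in a few days, while one with 200 daily visitors would need close to three months.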

That is where usability testing can come in.

Usability tests give us variants worth testing

Usability testing helps to balance out the biggest drawback of A/B tests. While A/B tests are extremely limited in their explanatory power, small-sample, qualitative usability tests are fantastic at giving us an understanding of users’ behaviors, motivations, and attitudes. Research practices can leverage the relative strengths (qualitative explanatory power vs. quantitative KPI-driven decision-making) of these methods by using an alternating sequence where observing users in a usability test informs the variants of an A/B test.

In a typical A/B testing process, a team would identify a lagging metric and hypothesize changes to the design that could improve it. At this stage, many turn to brainstorming to come up with design changes, but design modifications without a clear direction based on user needs can result in unfocused, aimless variants. Instead, these hypotheses and design changes are best when driven by actual user observation; this is where usability tests come in.

Returning to the previous example, let’s imagine I noticed a high bounce rate from new visitors to my homepage. I could set up a usability study with folks who aren’t regular readers of my newsletter to investigate the issue and observe what their first-time experiences are like. In these sessions, I might notice that they take a while to choose an article that is interesting to them. So, I might hypothesize this is the cause of the high bounce rate. Based on that hypothesis, I might decide to A/B test a new version of my homepage where I highlight my most popular articles in a featured section. During the A/B test, I might measure CTR and time-to-click to assess whether the revised design makes it easier for new visitors to identify an article they want to read.

Engaging users in usability tests ensures that the variants of an A/B test are worthwhile and prompted by expressed (or observed) user needs.

A programmatic approach

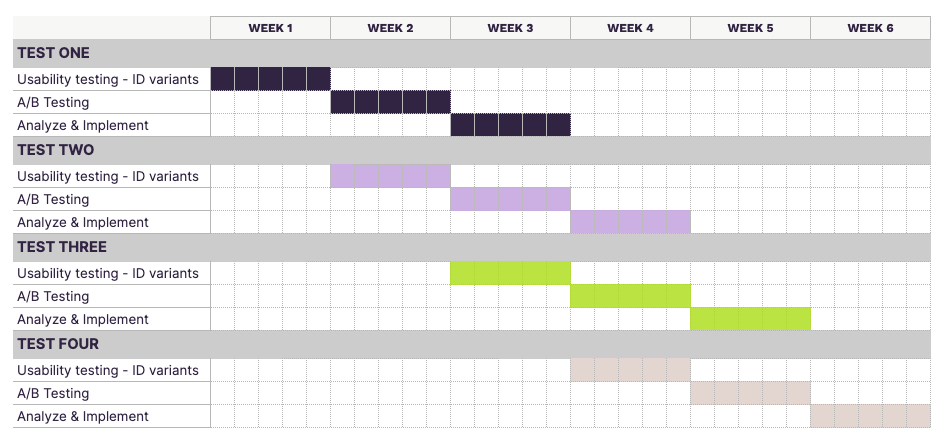

While usability testing can improve single A/B testing projects, the same approach can be extended to create a continual process for iterative design changes. By running multiple projects in parallel, a team can turn this sequence of usability tests and A/B tests into a rolling, sequential mixed-methods research program. I’ve had experience setting this up with a few teams, and the general pattern each project follows is:

Dedicate time to usability tests at the start of each project to develop hypotheses and design variants, then…

Proceed to perform the A/B test, and finally…

Analyze the results and decide which variant ought to be implemented moving forward.

The final trick to setting up a program like this is to offset the timing of each project (i.e., when project #1 moves into A/B testing, project #2 kicks off usability testing). By offsetting these three phases of activities, you can have three projects ongoing at any time: one project in usability testing/variant generation, one project in live A/B testing, and one project in final analysis & decision-making.
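The offset described above can be illustrated with a small scheduling sketch. It assumes, for simplicity, that all three phases take the same amount of time (one "cycle"), which real projects will rarely match exactly; the phase labels and function name are hypothetical.

```python
PHASES = ["usability testing", "A/B test", "analysis & decision"]

def staggered_schedule(num_projects, num_cycles):
    """Return, for each cycle, a mapping of project number -> active phase."""
    schedule = []
    for cycle in range(num_cycles):
        active = {}
        for project in range(1, num_projects + 1):
            # Each project starts one cycle after the project before it.
            phase_index = cycle - (project - 1)
            if 0 <= phase_index < len(PHASES):
                active[project] = PHASES[phase_index]
        schedule.append(active)
    return schedule

schedule = staggered_schedule(num_projects=4, num_cycles=6)
for cycle, active in enumerate(schedule, start=1):
    print(f"Cycle {cycle}: {active}")
```

From the third cycle onward, as long as new projects keep starting, three projects are active at once, one in each phase.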

The bottom line

A/B tests, while powerful for measuring the relative performance of design variations, offer limited explanatory power. They rarely explain why certain results occurred and cannot suggest what design variants we should test next. Small-sample usability tests serve as the perfect complement to A/B tests; they provide rich qualitative data about user behavior and motivation.

By combining these methods, researchers can leverage the strengths of each. Usability tests can be conducted at the start of each project to identify meaningful areas of improvement that can be assessed quantitatively through A/B testing. A research practice can build this sequence into a systematic mixed-methods program for continual, iterative improvements to an experience.